Introducing Synthefy Migas 1.0: State-of-the-Art Forecasting on Your Unique Data, in Minutes

Synthefy's Migas 1.0 Mixture-of-Experts time series forecasting model achieves top rankings on the GIFT-Eval benchmark and delivers state-of-the-art results on unseen datasets.

We're introducing Migas 1.0, our Mixture-of-Experts time series forecasting model, which ranks among the top models on GIFT-Eval, the leading time series forecasting benchmark. And yes, we named it after a taco.

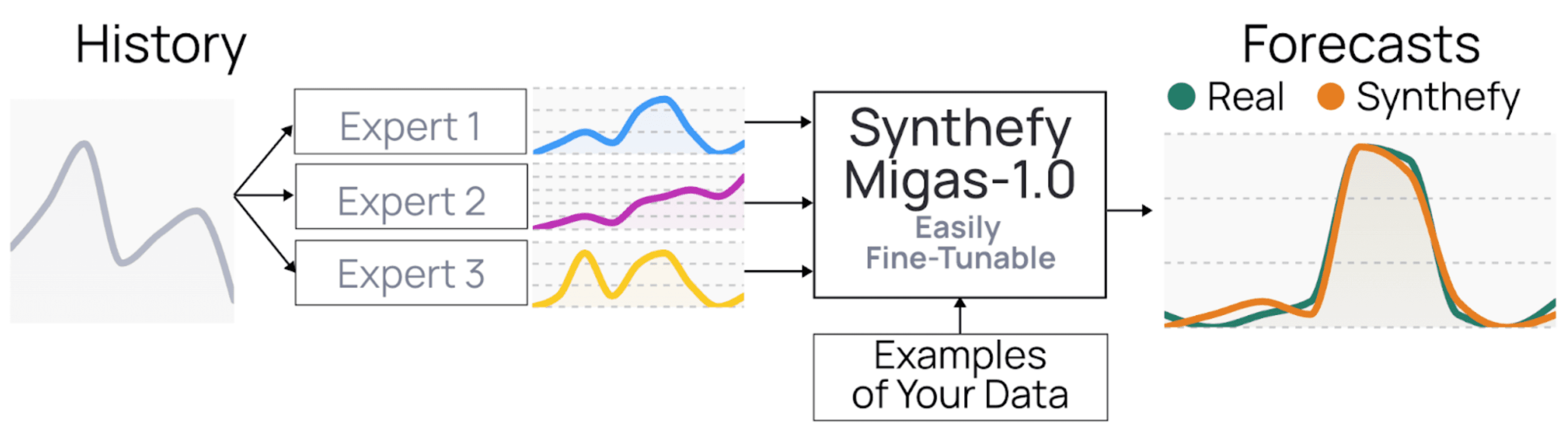

Figure 1: Synthefy's Migas 1.0 uses a Mixture-of-Experts approach that can be fine-tuned on your data within minutes and outperforms all available open-source forecasting models, delivering state-of-the-art results on leading time series benchmarks and unseen datasets.

Timeseries Foundation Models Are Not Diverse Enough

In the past year, several major AI research labs have released what they call timeseries foundation models (Google's TimesFM 2.5, Amazon's Chronos 2, Salesforce's Moirai 2, and Datadog's Toto). These models are trained on massive collections of timeseries data spanning a variety of domains, and claim to provide strong zero-shot forecasting capabilities.

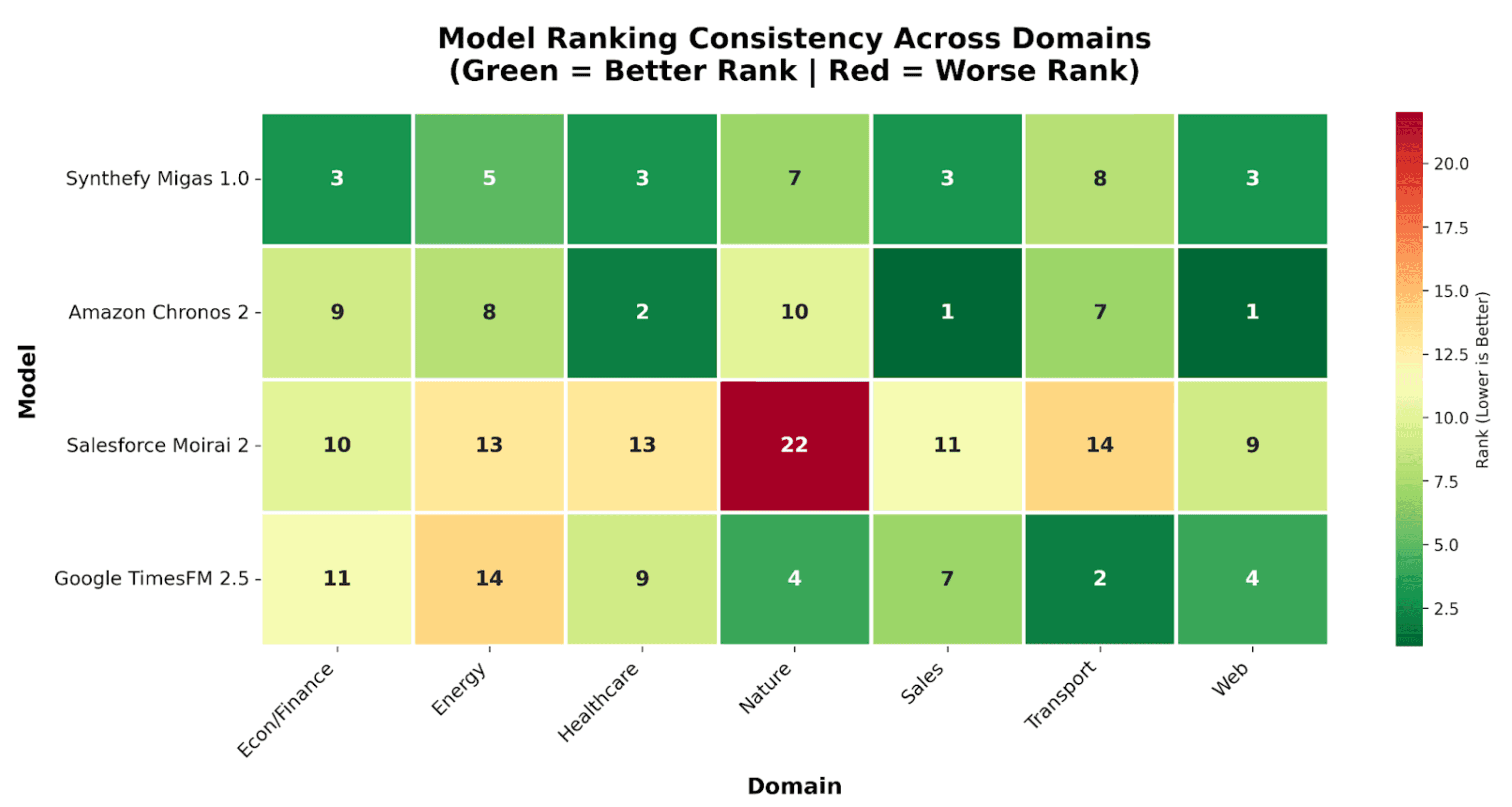

The "foundation" label suggests that these models generalize broadly across different types of data. In practice, we found that's not quite the case. Each model is biased toward the characteristics of its training distribution rather than capturing any universal structure of timeseries. As a result, performance can fluctuate drastically on out-of-distribution data. Chronos 2, for instance, tends to favor multivariate inputs where cross-series dependencies are clear, while TimesFM 2.5, Moirai 2, and Toto each lean toward the data domains they were trained on: web-scale behavioral signals, business metrics, and observability traces, respectively. These biases often translate into wild swings in zero-shot accuracy when the models face unfamiliar or structurally different data.

When we evaluated these models, we found that aggregate metrics can be misleading. While overall accuracy numbers tell one story, at the level of individual datasets and samples, performance differences are often dramatic.

Figure 2: Performance of Timeseries "Foundation" Models varies wildly across domains. Synthefy's Mixture-of-Experts (Migas 1.0) learns and leverages that variability to improve forecasting performance.
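To make this concrete, here is a toy illustration in Python. The per-dataset MASE scores below are invented for the example, not results from our evaluation, and the expert and dataset names are hypothetical. Two experts have exactly the same average error, yet each wins on a different dataset, so a router that picks the better expert per dataset beats both.

```python
# Invented per-dataset MASE scores (lower is better) for two hypothetical
# experts on three hypothetical datasets. These are NOT benchmark results.
scores = {
    "expert_a": {"retail": 0.5, "energy": 1.5, "web": 1.0},
    "expert_b": {"retail": 1.5, "energy": 0.5, "web": 1.0},
}


def mean(values):
    return sum(values) / len(values)


# Aggregate view: each expert's average MASE across all datasets.
aggregate = {name: mean(list(per_ds.values())) for name, per_ds in scores.items()}

# Per-dataset view: an "oracle" router that picks the best expert on each dataset.
datasets = scores["expert_a"].keys()
oracle = {ds: min(scores[name][ds] for name in scores) for ds in datasets}
oracle_mean = mean(list(oracle.values()))

print(aggregate)    # {'expert_a': 1.0, 'expert_b': 1.0}
print(oracle_mean)  # 0.6666666666666666
```

An oracle router is not available in practice, since it needs the true errors in advance; a learned combiner such as an MoE gate tries to approximate it from data.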

Synthefy Migas 1.0 - Turning Biases In Foundation Models Into Improved Performance

Let's be honest: nobody has access to a dataset diverse enough to train a truly zero-shot, universally robust timeseries model. Compared to the language domain, where models learn from internet-scale data with trillions of tokens, timeseries models are still orders of magnitude behind in data diversity.

At Synthefy, we turned that limitation into an opportunity. Instead of fighting against these biases, we decided to leverage them. Each foundation model has distinct strengths shaped by its pretraining data. By understanding those biases, we can make them work for specific forecasting scenarios rather than against them.

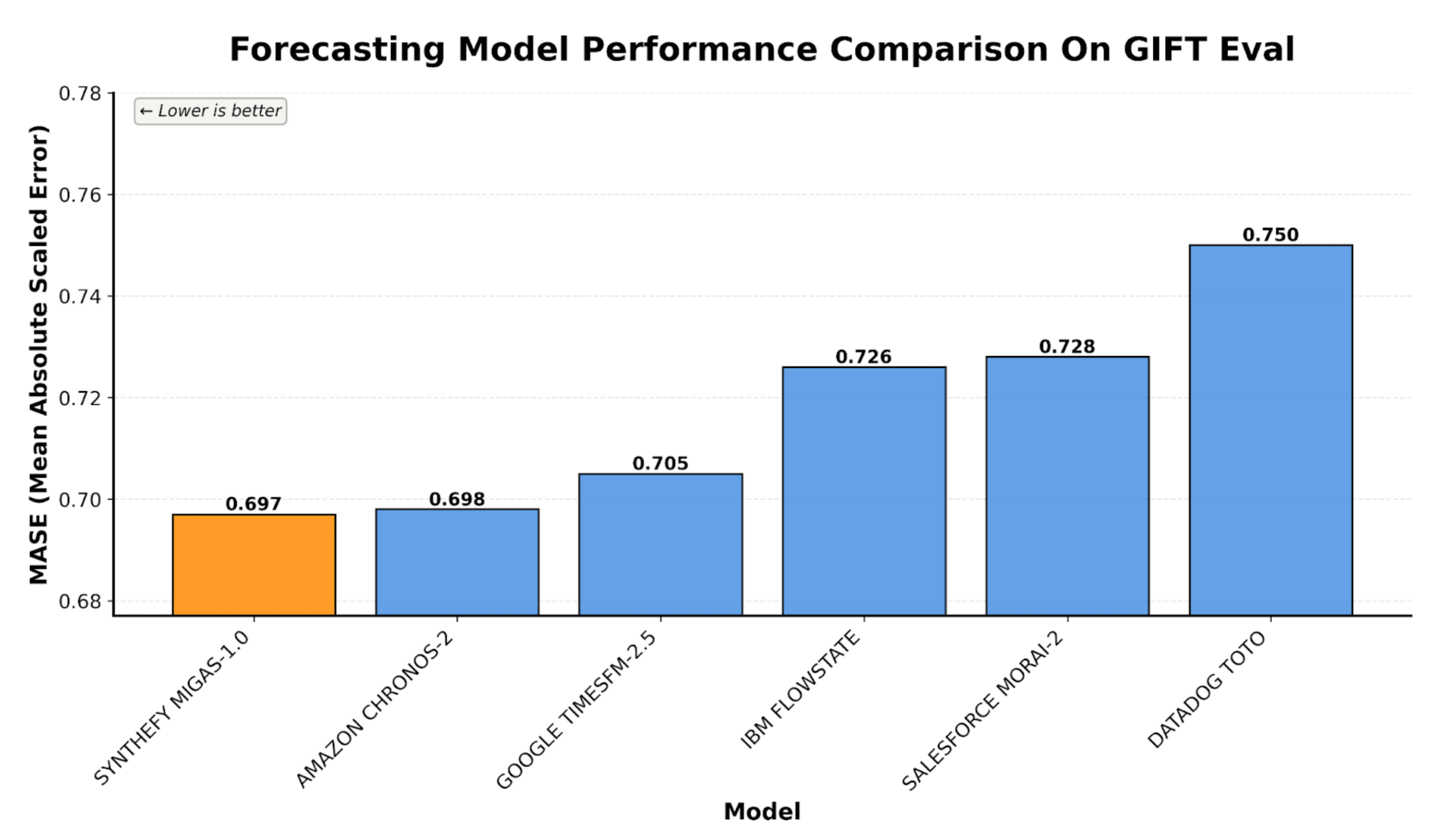

To do that, we built a lightweight Mixture-of-Experts (MoE) system: a 10-million-parameter neural network that learns how to combine foundation models intelligently using small amounts of fine-tuning data. With under a million training points and roughly an hour of training, our MoE achieves state-of-the-art performance on GIFT-Eval, showing that intelligently combining biased experts can outperform any single monolithic model. As our first-generation MoE, Migas 1.0 uses only univariate experts, yet it outperforms each of those experts by significant margins.
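As a rough illustration of what "learning to combine experts" means, the Python sketch below fits softmax mixing weights over three expert forecasts by gradient descent on squared error. It is a simplified stand-in, not Migas 1.0's architecture: the real gating network has about 10 million parameters and its inputs and structure aren't described here, whereas this sketch learns a single global, input-independent weight vector. The function names (`fit_gate`, `combine`) and the toy data are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def combine(weights, preds):
    """Weighted sum of the experts' forecasts for one target point."""
    return sum(w * p for w, p in zip(weights, preds))


def fit_gate(expert_preds, targets, lr=0.1, steps=500):
    """Fit global softmax mixing weights by gradient descent on mean squared error.

    expert_preds[i][k] is hypothetical expert k's forecast for targets[i].
    """
    k = len(expert_preds[0])
    n = len(targets)
    logits = [0.0] * k  # start from uniform weights
    for _ in range(steps):
        w = softmax(logits)
        # Gradient of the MSE with respect to each mixing weight.
        grad_w = [0.0] * k
        for preds, y in zip(expert_preds, targets):
            err = combine(w, preds) - y
            for j in range(k):
                grad_w[j] += 2.0 * err * preds[j] / n
        # Chain rule through softmax: dL/dz_j = w_j * (g_j - sum_i w_i * g_i).
        dot = sum(wi * gi for wi, gi in zip(w, grad_w))
        logits = [z - lr * wj * (gj - dot) for z, wj, gj in zip(logits, w, grad_w)]
    return softmax(logits)


# Toy fine-tuning data: three hypothetical experts with constant biases of
# +1, -1 and +3. No single expert is unbiased, but a mixture can be.
targets = [float(t) for t in range(1, 21)]
expert_preds = [[y + 1.0, y - 1.0, y + 3.0] for y in targets]

weights = fit_gate(expert_preds, targets)
mse = sum((combine(weights, p) - y) ** 2 for p, y in zip(expert_preds, targets)) / len(targets)
print([round(w, 3) for w in weights], mse)
```

Here the best single expert has an MSE of 1.0, while the learned mixture drives the error toward zero by cancelling the experts' opposing biases. A production MoE would typically condition the gate on the input context so the weights can change from series to series; the global weight vector here just keeps the gradient simple.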

Most importantly, because it adapts to your data, Migas 1.0 can outperform the forecasting models you may already run internally.

Figure 3: Synthefy's Migas 1.0 outperforms leading time series foundation models from major AI research labs. Notably, we outperform Chronos 2, the leading multivariate model, using only a mixture of univariate models.

Figure 4: Synthefy's Migas 1.0 ranks second overall in Mean Absolute Scaled Error (MASE) on GIFT-Eval.
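MASE, the metric ranked in Figure 4, divides a forecast's mean absolute error over the horizon by the mean absolute error of a (seasonal) naive forecast on the training history, so a score below 1 means the model beats that naive baseline. The Python sketch below follows this standard definition; GIFT-Eval's own choice of seasonal period and its aggregation of scores across series are not reproduced here, and the function name `mase` is our own.

```python
def mase(y_train, y_true, y_pred, m=1):
    """Mean Absolute Scaled Error.

    MAE of the forecast divided by the in-sample MAE of a seasonal naive
    forecast with period m (m=1 is the plain naive "repeat last value" forecast).
    """
    if len(y_train) <= m:
        raise ValueError("need more than m training points to compute the scale")
    naive_errors = [abs(y_train[t] - y_train[t - m]) for t in range(m, len(y_train))]
    scale = sum(naive_errors) / len(naive_errors)
    if scale == 0:
        raise ValueError("in-sample naive error is zero, so MASE is undefined")
    mae = sum(abs(a - f) for a, f in zip(y_true, y_pred)) / len(y_true)
    return mae / scale


# Naive in-sample errors are all 2, forecast MAE is 1, so MASE = 1 / 2.
print(mase([10, 12, 14, 16], y_true=[18, 20], y_pred=[17, 21]))  # 0.5
```

Because the error is scaled per series, MASE values can be compared across series with very different magnitudes, which is what makes it usable for ranking models across a benchmark as heterogeneous as GIFT-Eval.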

Stop Using AutoARIMA and Start Using Migas?

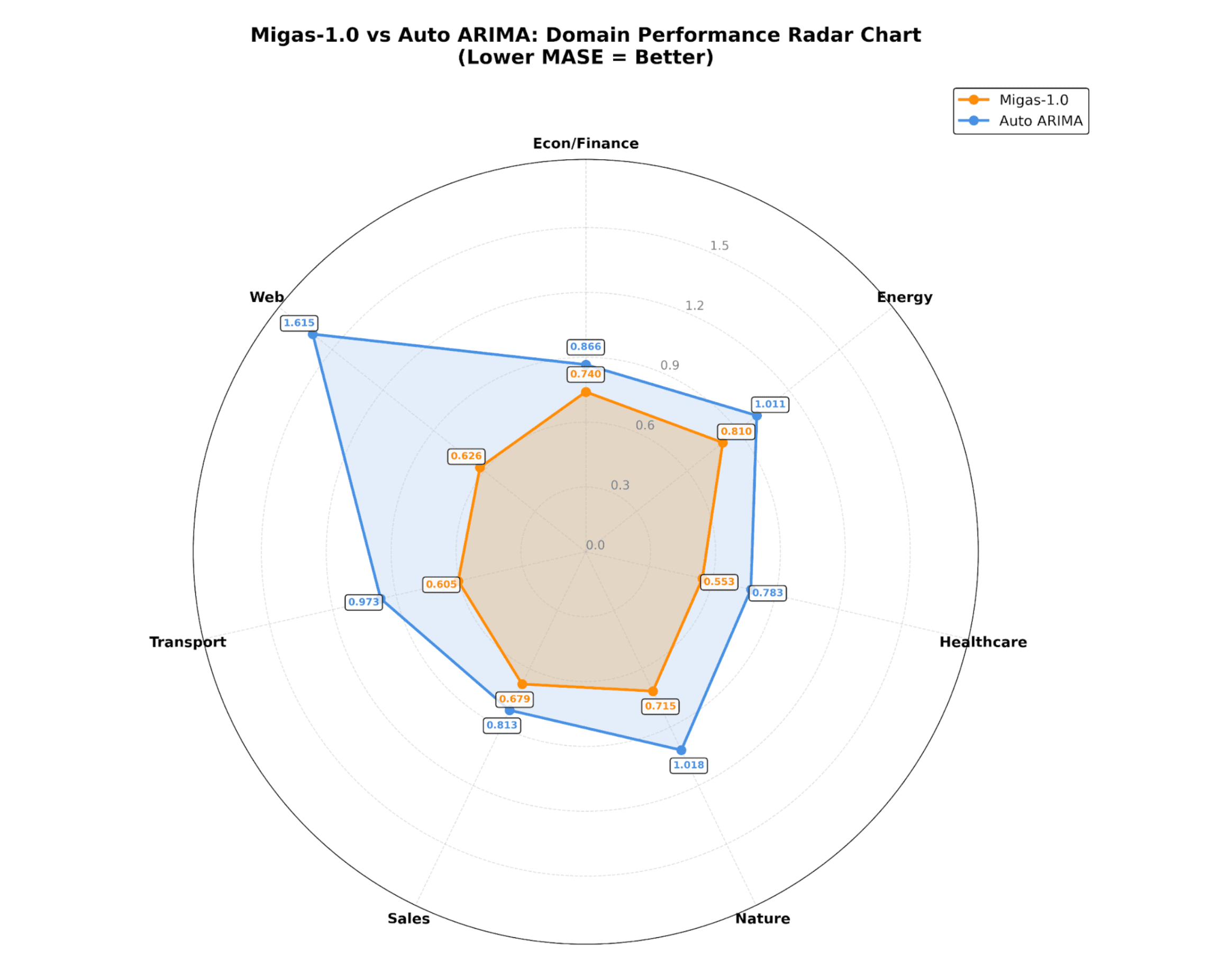

Figure 5: Across all domains in GIFT-Eval, Migas 1.0 outperforms AutoARIMA by considerable margins. If your forecasting pipeline still uses ARIMA methods, Synthefy offers big gains in accuracy.

Many enterprise and production workflows rely on statistical tools like AutoARIMA and Prophet. Here we compare Migas with AutoARIMA on diverse unseen datasets and show that it outperforms this statistical baseline across a range of enterprise use cases.

Migas is Built for Your Unique Data

At its core, Migas isn't just a single model; it's a framework for adapting foundation models to your specific domain. With minimal training data, Migas can be customized to reflect the unique dynamics of your business, system, or application, whether you're forecasting energy demand, financial metrics, sensor data, or anything else. Migas learns how your data differs from the experts' pretraining distributions and adjusts the mixture accordingly.

Customer Case Study: Real Impact in Minutes

A national retailer uploaded 12 months of SKU-level sales data and ran Migas 1.0 through the API.

Migas adapted to their distribution in ~45 minutes, reducing forecasting error by 22% and improving their revenue by 12%.

This is a typical outcome when Migas is able to learn the unique dynamics of your data and context.

In short, Migas gives you foundation-level performance fine-tuned to your world, delivering forecasts that are not just accurate but contextually aware and directly aligned with your data.

Try Migas in 30 Seconds and Fine-Tune in 30 Minutes

You can start forecasting right away with a version of Migas fine-tuned on GIFT-Eval using our Forecasting Quick Start. Reach out for support fine-tuning Migas on your own datasets.

We're offering:

- Free API calls and fine-tuning credits to get started quickly

- No model training infrastructure required

- Private and secure deployment options available on AWS

Apply For Access Here: https://console.synthefy.com/onboarding

Questions? Email us: contact@synthefy.com

Read some of our customer success stories to hear more about how Synthefy changes the game in retail, financial services, observability, networking, and more. We're excited to bring timeseries intelligence to you.